How a Building Collapse Scene Shook the Industry

No press release. No AI logo slapped on the screen. Just a collapsing building in the heart of Buenos Aires, and a quiet technological earthquake that followed.

Netflix’s sci-fi series El Eternauta needed a massive VFX moment: a building falling apart in a dystopian skyline. Normally, that would mean weeks of 3D modeling, simulation, rendering, and compositing. But this time, the shot was done 10 times faster. The cost? Low enough to fit a budget that could never have covered the same shot with traditional VFX.

“AI made this possible,” said Netflix co-CEO Ted Sarandos during a recent earnings call. And for the first time, the company admitted that this wasn’t just a side experiment. It was final production footage, generated using Runway AI.

That single shot didn’t just save time. It broke open a future where low-budget productions can now play in the big leagues. And it signaled one thing loud and clear: generative AI in filmmaking isn’t coming. It’s already rolling.

From Hype to Hands-On: Why Runway Is Beating Sora and Veo in Hollywood

OpenAI’s Sora can turn text into video. Google’s Veo promises cinematic scenes with style transfers and smooth motion. But in the Hollywood trenches, these tools are still warming up backstage.

Runway, meanwhile, is already shooting.

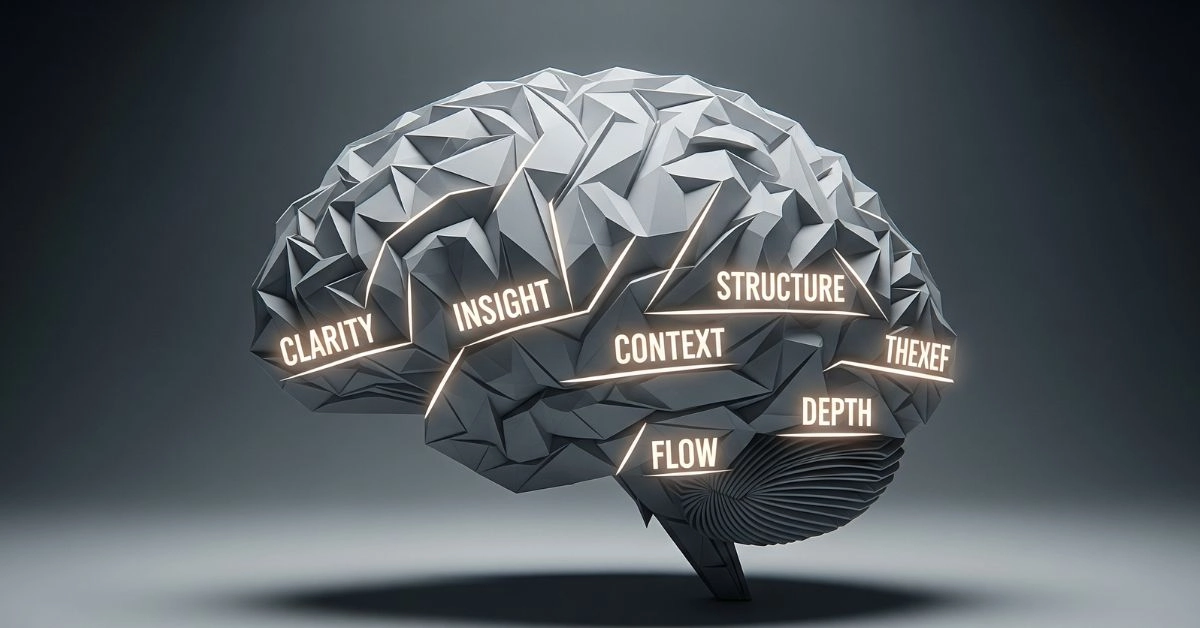

With its Gen-3 Alpha model, Runway made short-form video generation from text prompts practical. Not perfect, but good enough for concepting and pre-vis. Then came Gen-4, a leap in stability and style. And now, with its Act-Two system, the startup is turning a filmed human performance into animated character motion, skipping expensive mocap sessions altogether.

Lionsgate noticed. The studio signed a deal letting Runway train a custom model on Lionsgate's content library. This isn’t a test. It’s data-powered prep for real films. And with Netflix already applying the tech in production, the startup suddenly has more Hollywood street cred than its big-tech rivals.

Disney Is Cautious but Interested

While Netflix is going all in, Disney is circling the runway. Eyes wide open, but wheels still up.

Reports say the House of Mouse has been testing Runway’s tools, especially for pre-visualization and set design. But they haven’t confirmed any major use yet. A Disney spokesperson even clarified there were no immediate plans for deployment.

That hesitation makes sense. Disney is still healing from the fallout of the 2023 Hollywood strikes, where AI fears were front and center. It has also recently filed a copyright lawsuit against Midjourney, another AI startup, over how generative models are trained on studio-owned content.

For a studio that lives on IP, the risk of feeding proprietary data into third-party systems is a red flag. But make no mistake. The curiosity is there. Disney may be cautious now, but it knows it can’t stay on the sidelines forever.

AI Is Saving Time and Budget, but at What Cost?

AI can simulate rain, build cities, or fix lighting in a frame in minutes. But it can’t calm the storm brewing in the creative community.

When Netflix says it completed a VFX shot 10 times faster, that means a lot of traditional work was skipped. The labor of animators, render artists, and compositors was compressed into a prompt. Sarandos insists AI is “helping real people do real work with better tools,” but for many, that sounds like damage control.

The fear isn’t just about layoffs. It’s about something deeper. Can AI-created footage be trusted in genres like true crime or historical drama? One critic put it bluntly: “If I can’t trust what I’m seeing, what’s the point of storytelling?”

For audiences, these concerns are real. Subtle inconsistencies in AI-generated footage can break immersion. For creators, the worry is creative erosion: a world where vision is guided by algorithms trained on what has worked before, not what could be imagined next.

The Startup Behind It All: Runway’s Billion-Dollar Bet

Runway isn’t a new name. But until recently, it was more indie darling than industry disruptor.

Founded in New York, Runway first made waves with its Gen-1 and Gen-2 models: Gen-1 restyled existing footage, and Gen-2 added generation from text prompts. But Gen-3 Alpha changed the game, producing text-driven video with more control and coherence than earlier models.

Investors took notice. In 2025, Runway raised $308 million in a single round, pushing its valuation past $3 billion. The startup has now raised over half a billion dollars in total, building tools not just for effects but for every part of the pipeline, from script to screen.

Its Act-Two platform now automates motion capture. Its Gen-4 model refines multi-second video creation with more realism. And its deals with Netflix and Lionsgate mean one thing. This isn’t a testbed anymore. It’s part of the machine.

The Bigger Question: Will AI Democratise Storytelling or Dilute It?

In some ways, the answer is already visible.

Indie filmmakers are using Runway to pre-visualize scenes, simulate lighting, and key out green-screen footage in minutes. What once required a $100,000 VFX team is now being done by two-person crews with a laptop and a vision.

But for every story of empowerment, there’s a fear of erosion. If studios overuse generative tools, will content feel generic? If everything looks perfect, will it lose the messiness that gives cinema its soul?

The real battle ahead isn’t between AI and humans. It’s between speed and substance.

Runway’s tech is brilliant. But brilliance isn’t enough. The future of film depends on whether these tools expand creative freedom or quietly replace it.

As Sarandos said, “These tools are helping creators expand the possibilities of storytelling on screen.” The tools are here. The story is unfolding. And for Hollywood, the script is no longer just written by people. It’s co-written by code.